In this age of massive language models, it seems like everyone is talking about scaling, GPUs, trillion-parameter networks, and mind-blowing benchmarks. But much of the real progress happens behind the scenes, by making models smaller, cheaper, and faster, ideally with little or no loss in performance.

Whether you're a machine-learning engineer, a researcher, or someone building products powered by LLMs, a solid understanding of these optimization techniques is essential.

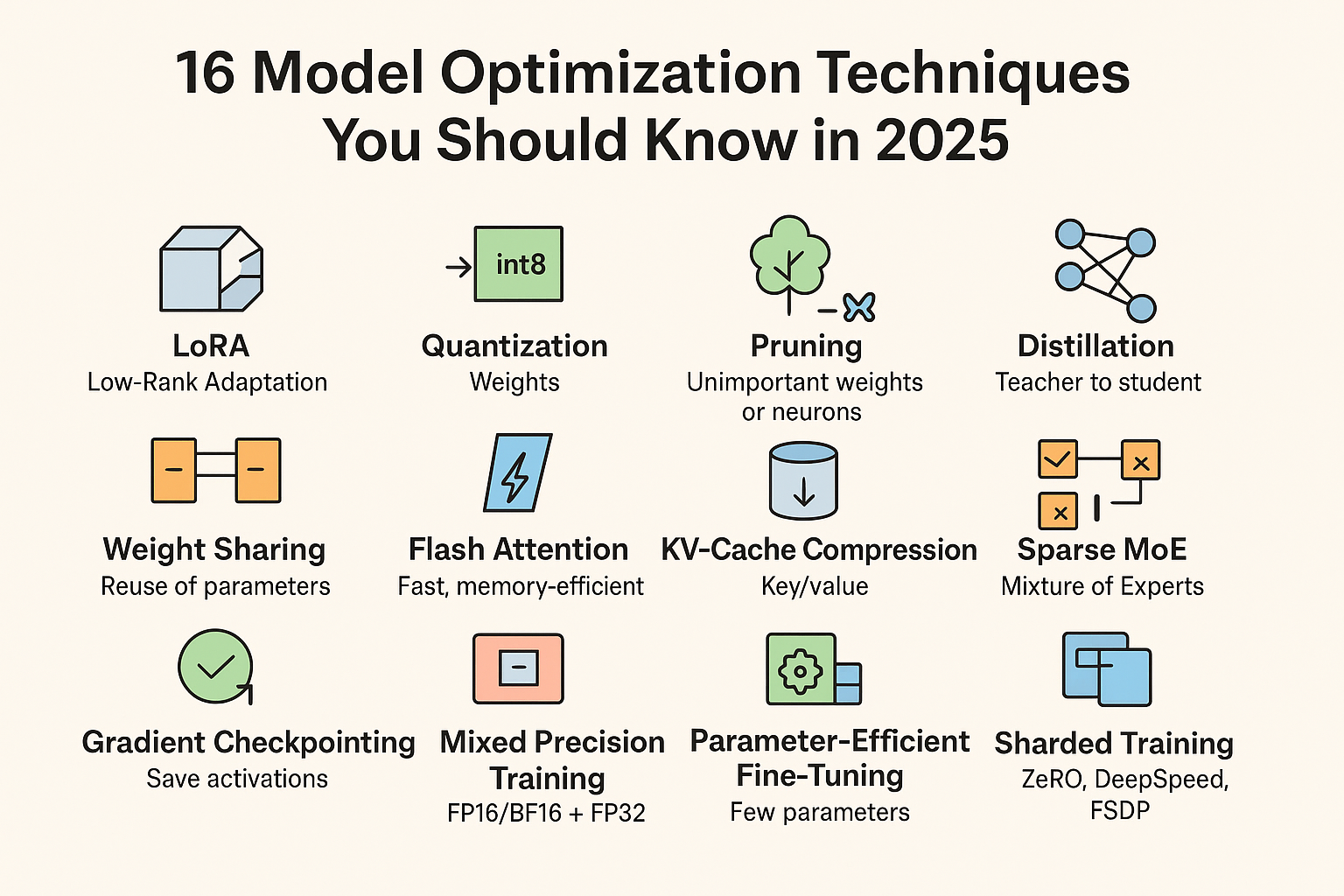

Let's dive into 16 key concepts that are shaping modern AI systems.

1. LoRA (Low-Rank Adaptation)

LoRA has become a go-to method for fine-tuning large models. Instead of updating every parameter, it freezes the original weights and trains a pair of small low-rank matrices whose product is added to selected weight matrices. Because only those small matrices are trained, memory usage drops sharply and training gets faster.

You can picture it as adding a small extension to a house rather than rebuilding the entire structure from scratch.
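To make the idea concrete, here is a minimal sketch in plain Python. It assumes a single 64×64 weight matrix, rank 4, and a scaling factor `alpha`; the helper names (`matvec`, `lora_forward`) and all sizes are illustrative choices, not taken from any particular library.

```python
import random

def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def lora_forward(W, A, B, x, alpha):
    """Compute W x + (alpha / r) * B (A x), where r is the LoRA rank."""
    r = len(A)
    base = matvec(W, x)                # frozen pretrained path
    update = matvec(B, matvec(A, x))   # trainable low-rank path
    scale = alpha / r
    return [b + scale * u for b, u in zip(base, update)]

random.seed(0)
d_in, d_out, r = 64, 64, 4  # hypothetical sizes for illustration

W = [[random.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]  # frozen
A = [[random.gauss(0, 0.01) for _ in range(d_in)] for _ in range(r)]   # trainable
B = [[0.0] * r for _ in range(d_out)]                                   # trainable, starts at zero
x = [random.gauss(0, 1) for _ in range(d_in)]

full_params = d_out * d_in        # parameters touched by full fine-tuning
lora_params = r * (d_in + d_out)  # parameters LoRA trains for this matrix

print(f"full fine-tuning: {full_params} params, LoRA: {lora_params} params")
```

Because `B` starts at zero, the adapted layer initially produces exactly the same output as the frozen one, so fine-tuning begins from the pretrained behavior.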

2. Quantization

Quantization lowers the numerical precision of a model's weights (and sometimes its activations), for example from float32 down to int8, int4, or even int2. This shrinks the model and speeds up inference, usually while keeping most of the original accuracy, though the loss tends to grow as the bit width shrinks.

It's like compressing an image from PNG to JPEG: the result is smaller and faster to load, yet still very close to the original.
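As a rough illustration, the sketch below applies symmetric per-tensor int8 quantization to a handful of made-up weights. This is only one simple scheme among many; the function names and sample values are assumptions for the example.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float values from the stored integers."""
    return [v * scale for v in q]

weights = [0.82, -1.27, 0.03, 0.5, -0.64]  # illustrative values
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# The rounding error per weight is at most half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Each weight now needs one byte instead of four, plus a single shared scale for the whole tensor.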

3. Pruning

Pruning removes weights or neurons that contribute little to the model's output. This makes the model lighter and, in some cases, can even improve how well it generalizes.

It's like trimming dead leaves from a plant so it can put its energy into healthy growth.
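One common way to decide what "barely makes a difference" is weight magnitude. The sketch below shows unstructured magnitude pruning on a toy weight list; the function name and the 50% sparsity target are assumptions for the example, and real pipelines typically fine-tune afterwards to recover accuracy.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned_idx = set(order[:n_prune])
    return [0.0 if i in pruned_idx else w for i, w in enumerate(weights)]

weights = [0.9, -0.02, 0.4, 0.01, -0.7, 0.05, 0.3, -0.003]  # illustrative values
pruned = magnitude_prune(weights, sparsity=0.5)
print(pruned)
```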

4. Distillation

Model distillation trains a smaller "student" model to mimic a larger "teacher" model. The student learns from the teacher's full output distribution rather than only from hard labels, so it can approach the teacher's performance with a much smaller footprint.

Think of it as a professor passing down their knowledge to a bright student.
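A common way to transfer that behavior is to match temperature-softened output distributions. The sketch below computes such a soft-target loss for a single example; the logits and the temperature of 2.0 are made-up values, and in practice this term is usually combined with the ordinary loss on the true labels.

```python
import math

def softmax(logits, temperature=1.0):
    """Softmax with temperature; higher temperature gives a flatter distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(p * math.log(p / q) for p, q in zip(p_teacher, p_student) if p > 0)
    return kl * temperature ** 2

teacher_logits = [4.0, 1.0, 0.5]  # illustrative values
student_logits = [2.5, 1.5, 0.2]

loss_diff = distillation_loss(student_logits, teacher_logits)
loss_same = distillation_loss(teacher_logits, teacher_logits)
print(loss_diff, loss_same)
```

The loss is zero when the student reproduces the teacher's distribution and grows as the two diverge.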

5. Weight Sharing

Weight sharing lets multiple layers in a model use the same set of parameters rather than each layer having its own. Models like ALBERT rely on this to shrink their parameter count significantly.

Picture several rooms all using the same bookshelf instead of each having their own separate one.
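A back-of-the-envelope calculation shows the effect. The sketch below uses a rough approximation of a transformer block's size (attention and feed-forward matrices only, ignoring biases, layer norms, and embeddings) with a hypothetical 12-layer, 768-hidden encoder; the numbers are illustrative, not the published sizes of any model.

```python
def transformer_block_params(hidden):
    """Approximate weight count of one block (matrices only)."""
    attention = 4 * hidden * hidden     # Q, K, V, and output projections
    feed_forward = 8 * hidden * hidden  # two projections with a 4x wider inner layer
    return attention + feed_forward

def encoder_params(num_layers, hidden, share_across_layers):
    """Total block parameters with or without cross-layer sharing."""
    per_block = transformer_block_params(hidden)
    return per_block if share_across_layers else num_layers * per_block

unshared = encoder_params(12, 768, share_across_layers=False)
shared = encoder_params(12, 768, share_across_layers=True)
print(f"unshared: {unshared:,}  shared: {shared:,}")
```

Note that sharing reduces the number of stored parameters, not the amount of computation: the shared block still runs once per layer.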

6. Flash Attention

Flash Attention reorganizes how attention is computed without changing the result. By processing the inputs in blocks and never materializing the full attention matrix in GPU memory, it cuts memory use and speeds up training, particularly with long sequences.

It's like working through a math problem in a clever order so you don't need a massive notepad.
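The core trick can be sketched in plain Python for a single query: process keys and values block by block while keeping a running maximum, a running normalizer, and a running weighted sum. This is only an illustration of the blockwise softmax idea under simplified assumptions; the real implementation is a fused GPU kernel that also tiles over queries, and the function names here are hypothetical.

```python
import math
import random

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attention_full(q, keys, values):
    """Reference: compute every score first, then softmax, then the weighted sum."""
    scale = 1.0 / math.sqrt(len(q))
    scores = [dot(q, k) * scale for k in keys]
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * v[j] for w, v in zip(weights, values)) / total for j in range(dim)]

def attention_blockwise(q, keys, values, block_size):
    """Same result, but only one block of scores exists at any time."""
    scale = 1.0 / math.sqrt(len(q))
    running_max = float("-inf")
    running_sum = 0.0
    acc = [0.0] * len(values[0])
    for start in range(0, len(keys), block_size):
        k_blk = keys[start:start + block_size]
        v_blk = values[start:start + block_size]
        scores = [dot(q, k) * scale for k in k_blk]
        new_max = max(running_max, max(scores))
        # Rescale earlier partial results to the new maximum.
        correction = math.exp(running_max - new_max)
        running_sum *= correction
        acc = [a * correction for a in acc]
        for s, v in zip(scores, v_blk):
            w = math.exp(s - new_max)
            running_sum += w
            acc = [a + w * vj for a, vj in zip(acc, v)]
        running_max = new_max
    return [a / running_sum for a in acc]

random.seed(0)
d, n = 8, 37  # illustrative sizes
q = [random.gauss(0, 1) for _ in range(d)]
keys = [[random.gauss(0, 1) for _ in range(d)] for _ in range(n)]
values = [[random.gauss(0, 1) for _ in range(d)] for _ in range(n)]

full = attention_full(q, keys, values)
blockwise = attention_blockwise(q, keys, values, block_size=5)
max_diff = max(abs(a - b) for a, b in zip(full, blockwise))
print(max_diff)
```

The two functions agree up to floating-point rounding, which reflects the fact that this reorganization is exact rather than an approximation.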

7. KV-Cache Compression

During inference, transformers cache the Key/Value tensors for every previous token, and this cache grows linearly with context length and batch size. KV-cache compression shrinks it using techniques like quantization, sparsity, or deduplication.

This is especially important for models that handle long contexts and for systems that need to process a lot of requests efficiently.
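To get a feel for the sizes involved, the sketch below estimates the cache for a hypothetical model with 32 layers, 32 key/value heads, and a head dimension of 128. The configuration is an assumption for illustration, and the int8 figure ignores the small overhead of storing quantization scales.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len, batch_size, bytes_per_value):
    """Size of the cache: one K and one V vector per layer, head, and token."""
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * batch_size * bytes_per_value

# Hypothetical configuration, 4096-token context, one sequence.
config = dict(num_layers=32, num_kv_heads=32, head_dim=128, seq_len=4096, batch_size=1)

fp16_bytes = kv_cache_bytes(**config, bytes_per_value=2)
int8_bytes = kv_cache_bytes(**config, bytes_per_value=1)

print(f"FP16 cache: {fp16_bytes / 1024**3:.1f} GiB")
print(f"INT8 cache: {int8_bytes / 1024**3:.1f} GiB")
```

Every additional concurrent request multiplies this figure, which is why the cache often limits how many requests a server can batch together.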

8. Sparse Mixture-of-Experts (MoE)

MoE models have a large pool of “experts,” but a router activates only a small number of them for each token. This gives the model a large parameter capacity while keeping the compute per token low.

It’s a bit like having thousands of specialists on call, but only the two most relevant ones are brought in for each task.
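The routing step can be sketched as follows. In a real model the router scores come from a learned layer applied to the token and each expert is a feed-forward network; here the scores are passed in directly and the experts are toy functions, so everything in this example is an illustrative assumption. The gating shown (softmax over the selected experts' scores) is one common variant.

```python
import math

def top_k_route(router_logits, k):
    """Pick the k highest-scoring experts and normalize their gate weights."""
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:k]
    m = max(router_logits[i] for i in top)
    exps = {i: math.exp(router_logits[i] - m) for i in top}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}

def moe_forward(x, experts, router_logits, k=2):
    """Run only the selected experts and mix their outputs by gate weight."""
    gates = top_k_route(router_logits, k)
    out = sum(gate * experts[idx](x) for idx, gate in gates.items())
    return out, sorted(gates)

# Four toy "experts" that just scale their input.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
router_logits = [0.1, 2.0, -1.0, 2.0]  # made-up scores for one token

out, used = moe_forward(10.0, experts, router_logits, k=2)
print(out, used)
```

Only two of the four experts run for this token, so compute scales with `k` rather than with the total number of experts.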

9. Gradient Checkpointing

Training big models requires storing many intermediate activations for the backward pass. Gradient checkpointing cuts memory usage by saving only some of these activations and recomputing the rest during backpropagation.

The trade-off is extra computation time in exchange for fitting much larger models or batches into the same memory.
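The mechanism can be shown on a toy chain of scalar layers. The sketch below compares a backward pass that stores every layer input with one that stores only every fourth input and recomputes the rest segment by segment. The layer function, weights, and segment length are arbitrary choices for illustration.

```python
import math

def layer_forward(w, x):
    return math.tanh(w * x)

def layer_backward(w, x, grad_out):
    """Gradient with respect to the layer input, given that input."""
    y = math.tanh(w * x)
    return grad_out * (1.0 - y * y) * w

def grad_store_all(weights, x):
    """Standard backprop: keep every layer input from the forward pass."""
    inputs = []
    for w in weights:
        inputs.append(x)
        x = layer_forward(w, x)
    grad = 1.0
    for w, inp in zip(reversed(weights), reversed(inputs)):
        grad = layer_backward(w, inp, grad)
    return grad, len(inputs)

def grad_checkpointed(weights, x, segment):
    """Keep one input per segment; recompute the others during backward."""
    checkpoints = {}
    for i, w in enumerate(weights):
        if i % segment == 0:
            checkpoints[i] = x
        x = layer_forward(w, x)
    grad = 1.0
    for start in sorted(checkpoints, reverse=True):
        seg_weights = weights[start:start + segment]
        # Re-run the forward pass for this segment only.
        inputs, h = [], checkpoints[start]
        for w in seg_weights:
            inputs.append(h)
            h = layer_forward(w, h)
        for w, inp in zip(reversed(seg_weights), reversed(inputs)):
            grad = layer_backward(w, inp, grad)
    return grad, len(checkpoints)

weights = [0.5 + 0.1 * i for i in range(12)]  # 12 toy layers
g_full, stored_full = grad_store_all(weights, 0.3)
g_ckpt, stored_ckpt = grad_checkpointed(weights, 0.3, segment=4)
print(g_full, g_ckpt, stored_full, stored_ckpt)
```

Both approaches produce the same gradient, but the checkpointed version holds 3 activations after the forward pass instead of 12, at the cost of running each segment's forward computation a second time.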

10. Mixed Precision Training

This technique trains models using lower-precision formats like FP16 or BF16 for most computation while keeping sensitive values, such as a master copy of the weights, in FP32. Lower precision makes training faster and uses less memory, while the FP32 parts keep training numerically stable.

This approach is now standard practice in deep learning.
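One stability issue with FP16 is that very small gradients underflow to zero. A common remedy is loss scaling, which the sketch below illustrates using Python's `struct` module to round values to half precision. The gradient value and scale factor are made up, and Python's 64-bit float stands in for the higher-precision side.

```python
import struct

def to_fp16(value):
    """Round a Python float to the nearest IEEE half-precision value."""
    return struct.unpack("e", struct.pack("e", value))[0]

tiny_grad = 1e-8     # illustrative gradient, below FP16's smallest subnormal
loss_scale = 1024.0  # illustrative scale factor

unscaled = to_fp16(tiny_grad)             # lost: rounds to zero in FP16
scaled = to_fp16(tiny_grad * loss_scale)  # survives the trip through FP16
recovered = scaled / loss_scale           # unscale in higher precision

print(unscaled, recovered)
```

BF16 keeps the same exponent range as FP32, so it is far less prone to this kind of underflow and is often used without loss scaling.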