Why the Learning Rate Actually Matters

If you’ve ever trained a neural network, you know the feeling of staring at a loss curve. You’re hoping for a smooth downward slide, but instead it starts bouncing up and down or barely moves at all.

When that happens, the learning rate is usually the troublemaker.

This tiny little number can make your model learn smoothly and effectively or completely lose its mind.

What the Learning Rate Really Does

Imagine the learning rate as the "urgency level" your model uses when it's trying to correct its errors.

When the model makes a prediction, it checks it against the actual answer and tweaks its internal settings. The size of that tweak is determined by the learning rate.

A very small learning rate is like the model saying in a soft voice, "Alright, let me just change this a tiny bit."

A large learning rate is more like telling the model to overhaul everything.

It’s pretty easy to see which approach is the safer bet.

So How Does It Affect Training?

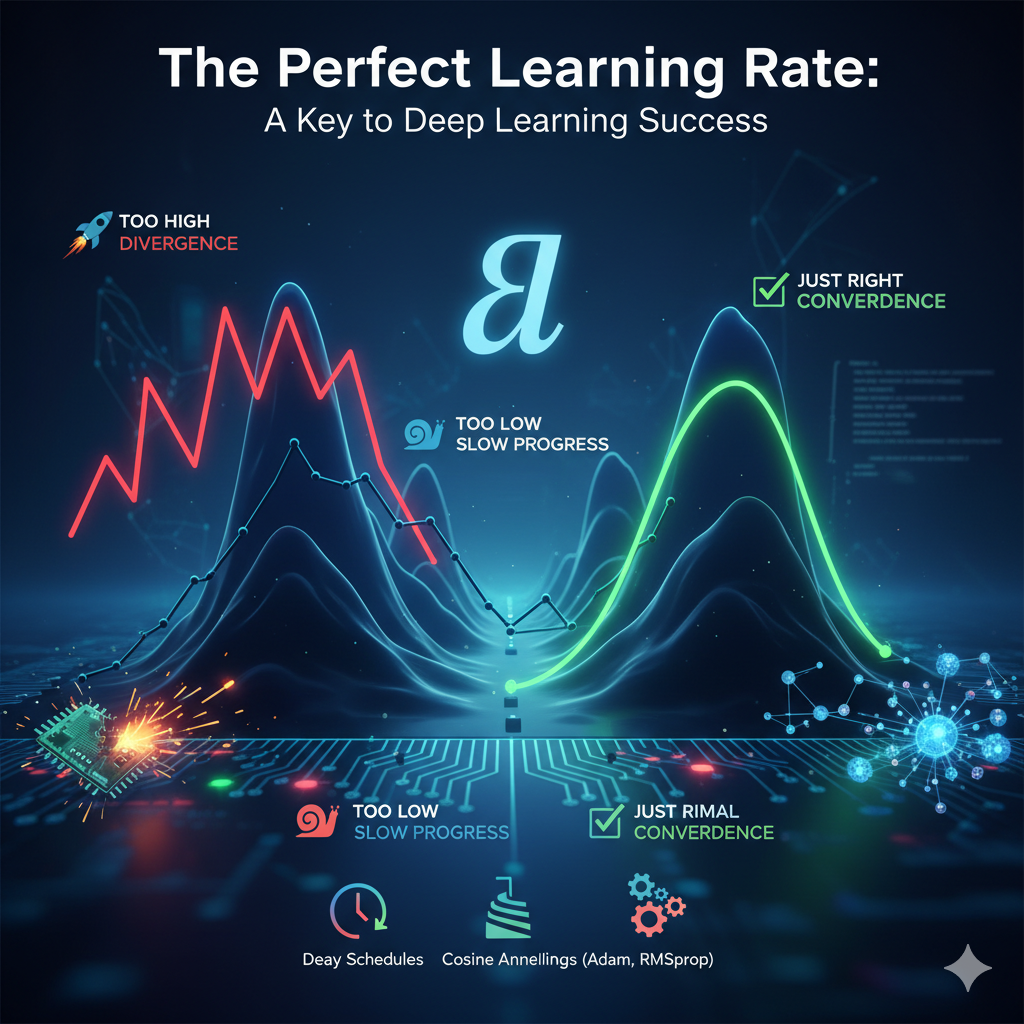

Is the learning rate too high? You'll see your model bouncing all over the place, missing the mark, and the loss graph looking like a wild rollercoaster ride.

And if it's too low? Your model moves at a snail's pace. It's technically learning, but it feels like an eternity waiting for any real progress.

Neither of these scenarios is fun.
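
To see both failure modes in numbers, here is a minimal sketch in plain Python. It assumes a toy loss, L(w) = w², and the function name `train`, the starting weight, and the three learning rates are all made up for illustration. A real network will behave less tidily.

```python
def train(lr, steps=20, w=5.0):
    """Run plain gradient descent on the toy loss L(w) = w**2."""
    losses = []
    for _ in range(steps):
        grad = 2 * w          # dL/dw for L(w) = w**2
        w = w - lr * grad     # step against the gradient, scaled by lr
        losses.append(w ** 2)
    return losses


# Illustrative rates only: one too high, one too low, one in between.
for lr in (1.1, 0.001, 0.1):
    losses = train(lr)
    print(f"lr={lr}: first loss {losses[0]:.3f}, last loss {losses[-1]:.3g}")
```

On this toy problem the largest rate makes the loss grow on every step, the smallest has barely moved it after 20 steps, and the middle value drives it close to zero.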

The Mathematical Formulation

For weight w, gradient ∇L(w), and learning rate α:

$$w_{\text{new}} = w - \alpha \, \nabla L(w)$$

Where:

- α = learning rate

- ∇L(w) = the gradient, which sets the direction and size of the correction

- w_new = the new, hopefully better weight

One simple formula controlling the entire learning process.
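
To make that concrete, here is one update worked by hand. The rule is the usual gradient descent step, new weight = w − α·∇L(w). The toy loss L(w) = w², the starting weight w = 2, and α = 0.1 are assumptions picked purely for illustration:

```latex
\begin{aligned}
\nabla L(w) &= 2w = 2 \cdot 2 = 4 \\
w_{\text{new}} &= w - \alpha \, \nabla L(w) = 2 - 0.1 \cdot 4 = 1.6 \\
L(w_{\text{new}}) &= 1.6^2 = 2.56 \quad \text{(down from } L(w) = 4\text{)}
\end{aligned}
```

Swap in α = 1.5 and the same step gives 2 − 1.5 · 4 = −4, where the loss is 16. Same gradient, same formula, but the step overshoots and the loss gets worse.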

Analogy

Let me give an analogy to better explain the learning rate.

Imagine you’re trying to climb a hill with a blindfold on.

- If you take tiny steps, you’ll get to the top, but maybe by next year.

- If you take huge leaps, you might jump past the peak, tumble downhill, and keep going the wrong way.

- If you take steady, confident steps, you’ll make smooth, meaningful progress.

That’s exactly what a good learning rate feels like.

Signs Your Learning Rate Is Wrong

When It’s Too High

- Loss is bouncing all over the place

- Training becomes unstable

- Model refuses to settle on good values

When It’s Too Low

- Loss barely decreases

- Training feels like watching paint dry

- Model may settle for a mediocre solution

Practical Tips

- Always monitor your loss curve

- Try adaptive optimizers like Adam or RMSProp

- Don’t be afraid to experiment: different models prefer different learning rates
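
If you want to turn "monitor your loss curve" into something you can run, here is a rough sketch. The function `diagnose`, its labels, and its thresholds are hypothetical and chosen only for illustration. It's a quick sanity check on a list of recorded losses, not a rule any library uses.

```python
def diagnose(losses, min_drop=0.05):
    """Label a loss history with a rough guess about the learning rate.

    Hypothetical heuristic: the thresholds are arbitrary illustration values.
    """
    if len(losses) < 2:
        return "not enough data"
    first, last = losses[0], losses[-1]
    # Count steps where the loss went up instead of down.
    upticks = sum(1 for a, b in zip(losses, losses[1:]) if b > a)
    # Ended worse than it started, or rose in at least half of the steps.
    if last > first or upticks * 2 >= len(losses) - 1:
        return "possibly too high"
    # Relative improvement over the whole history is tiny.
    if first > 0 and (first - last) / first < min_drop:
        return "possibly too low"
    return "looks reasonable"


print(diagnose([1.0, 3.2, 0.9, 5.1, 2.7]))    # bouncing curve -> possibly too high
print(diagnose([1.0, 0.999, 0.998, 0.997]))   # barely moving  -> possibly too low
print(diagnose([1.0, 0.6, 0.4, 0.3, 0.25]))   # steady decline -> looks reasonable
```

Real loss curves are noisy, so treat a label like this as a nudge to go look at the plot, not as a verdict.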